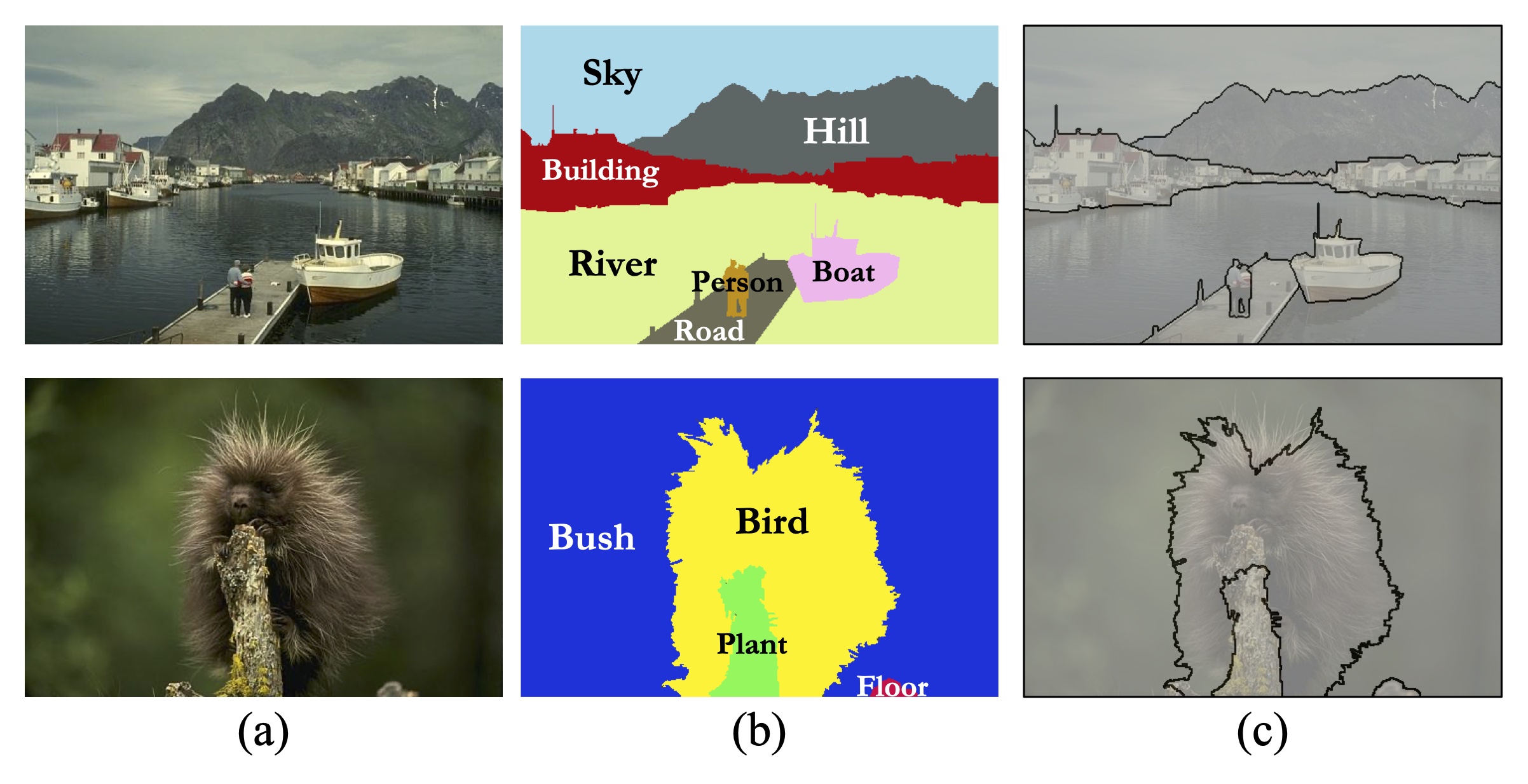

Figure 1: Example images with their semantic labeling and image segmentation results. Even when the semantic labels are imperfect, our pipeline can still obtain satisfactory segmentation results.

Abstract

Image segmentation is known to be an ambiguous problem whose solution requires integrating image and shape cues at various levels; low-level information alone is often insufficient for a segmentation algorithm to match human capability. Two trends have recently become popular in this area: (1) low-level and mid-level cues are combined in learning-based approaches to localize segmentation boundaries; (2) high-level vision tasks such as image labeling and object recognition are performed directly to obtain object boundaries. In this paper, we present an interesting observation: performing image segmentation in the reverse direction, i.e., using a high-level semantic labeling approach to address a low-level segmentation problem, can be a proper solution. We perform semantic labeling on input images and derive segmentations from the labeling results. We adopt graph coloring theory to connect these two tasks and provide theoretical insights into our solution. This seemingly unusual way of doing image segmentation leads to surprisingly encouraging results, superior or comparable to those of state-of-the-art image segmentation algorithms on multiple publicly available datasets.
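To make the idea of deriving a segmentation from a labeling concrete, the following is a minimal sketch, not the paper's actual procedure. It assumes that a per-pixel label map is already available and that a segment is a 4-connected region of pixels sharing one label. The function name `segments_from_labels` and the toy label map are hypothetical and serve only as illustration.

```python
from collections import deque


def segments_from_labels(label_map):
    """Split a 2D label map into 4-connected regions of equal label.

    Assumption: a segment is a maximal 4-connected set of pixels that
    share the same label. Returns (segment_ids, number_of_segments).
    """
    height = len(label_map)
    width = len(label_map[0]) if height else 0
    segment_ids = [[-1] * width for _ in range(height)]
    next_id = 0

    for r in range(height):
        for c in range(width):
            if segment_ids[r][c] != -1:
                continue  # pixel already belongs to a segment
            label = label_map[r][c]
            segment_ids[r][c] = next_id
            queue = deque([(r, c)])
            # Breadth-first flood fill over same-label neighbors.
            while queue:
                y, x = queue.popleft()
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (
                        0 <= ny < height
                        and 0 <= nx < width
                        and segment_ids[ny][nx] == -1
                        and label_map[ny][nx] == label
                    ):
                        segment_ids[ny][nx] = next_id
                        queue.append((ny, nx))
            next_id += 1

    return segment_ids, next_id


# Hypothetical 3x3 label map using only three distinct labels.
toy_labels = [
    [0, 0, 1],
    [0, 2, 1],
    [2, 2, 0],
]
toy_segments, toy_count = segments_from_labels(toy_labels)
print(toy_count)     # 4
print(toy_segments)  # [[0, 0, 1], [0, 2, 1], [2, 2, 3]]
```

In this toy example, three labels yield four segments: the two regions labeled `0` are not adjacent, so they become separate segments. This illustrates why a small label set can describe many segments, in the spirit of graph coloring, where only neighboring regions need distinct colors.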